Making Things Worse Pays Off Now. Cory Doctorow Explains Why.

Bioneers | Published: January 5, 2026

When Cory Doctorow spoke at Bioneers in 2017, the warning signs were already there. But in the years since, the tech landscape has become more extractive and more brittle at the same time — less accountable, harder to leave, and easier to break.

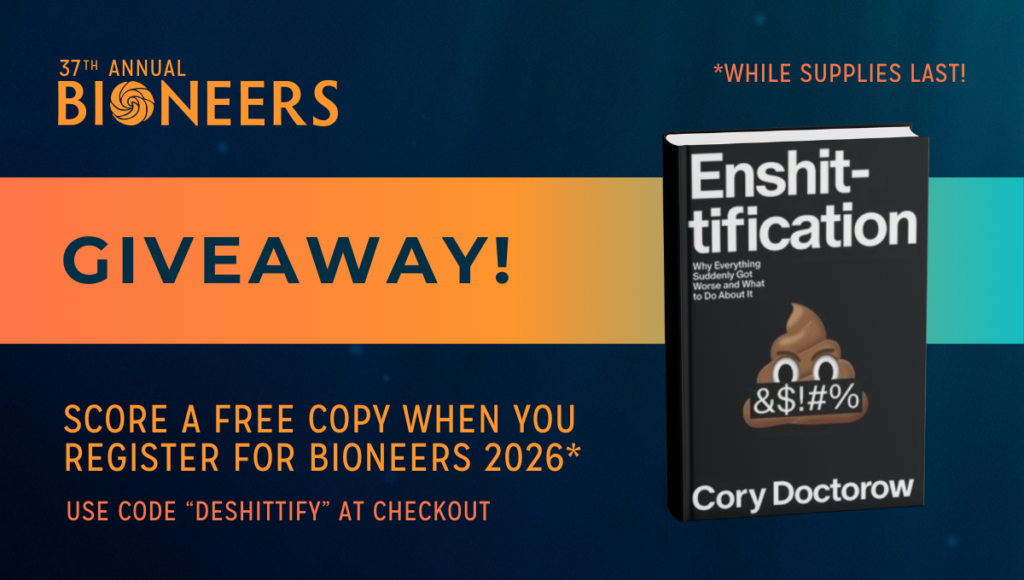

Doctorow has spent decades thinking and writing at the intersection of technology, power, and democracy. An award-winning science fiction author, journalist, and digital rights activist, he is the author of dozens of books (including the recent nonfiction work Enshittification: Why Everything Suddenly Got Worse and What to Do About It) and a longtime advocate for an open, repairable, and more humane internet. He works with the Electronic Frontier Foundation, writes the daily blog Pluralistic, and teaches and researches technology policy at institutions including Cornell, MIT, and the University of North Carolina.

What’s shifted in Doctorow’s thinking isn’t just a growing catalog of bad tech behavior. It’s a clearer diagnosis of the conditions that allow those behaviors to win. When competition, regulation, labor power, and interoperability are weakened or removed, the worst incentives inside companies stop being fringe ideas and start becoming the dominant strategy.

And that dynamic doesn’t stay contained within the tech sector. It shows up in environmental destruction and e-waste, in fragile infrastructure and locked-down clean energy systems, in the erosion of worker power, and in the steady narrowing of democratic choice. In this conversation, Doctorow traces how we got here — not as an inevitability, but as the foreseeable result of policy decisions made in living memory — and what it would take to rebuild the collective power needed to change course.

Bioneers: A lot of people talk about “greedy tech companies” or “bad CEOs.” That’s not quite how you frame the problem. What do you think actually changed?

Cory Doctorow: It’s not unusual for the leadership of a company to be greedy. That’s always been true. What’s changed is that firms can now be openly abusive and still thrive. In fact, they can often make more money by making their products worse.

That’s the part people should really be alarmed by. In a healthy system, bad behavior is supposed to be kept in check by competition, regulation, workers pushing back, or customers walking away. What we’ve done over the last several decades is remove those sources of discipline, one by one.

So instead of obsessing over whether individual executives are uniquely awful, which, frankly, is beside the point, we should be looking at the policies and enforcement failures that let this behavior win. When companies no longer fear competitors, regulators, labor pressure, or interoperability, the worst incentives rise to the top. And that’s how you end up with products that are clearly not fit for purpose, but are still wildly profitable.

Bioneers: You coined the term “enshittification” to describe what happens to platforms over time. What do you mean by that?

Cory: I’ve been doing digital rights work for a long time, and a big part of the job is coming up with language people can actually remember: metaphors, framing devices, sometimes slightly rude words. “Enshittification” started almost as a joke, but it stuck because it names something people instantly recognize.

The pattern itself has three stages.

In the first stage, platforms are good to their users. They offer useful features, generous terms, and a decent experience. The goal is to hook people and lock them in.

Once users are locked in, the platform enters a second stage: It makes things worse for users in order to benefit business customers — advertisers, sellers, publishers — who also become dependent on access to those users and get locked in themselves.

Then comes the third stage. The platform starts extracting value from the business customers, too. Because they can’t easily leave, value is withdrawn from both sides and shoveled upward to executives and shareholders.

This isn’t a conspiracy. No one has to twirl a mustache in a dark room. It’s a predictable incentive pattern that emerges when escape routes disappear … when there’s no real competition, no meaningful regulation, and no way out. Once discipline is removed, this behavior becomes rational. And that’s why we see it over and over again.

Bioneers: Do you think companies set out to enshittify their products, or does this happen gradually?

Cory: It’s incremental. Firms are always trying to pay as little as possible for their inputs and charge as much as possible for their outputs. That’s capitalism 101.

What normally constrains that behavior is what economists call discipline: competition, regulation, labor pressure, and the risk that customers will leave. In a healthy system, companies try a lot of bad ideas, and most of them fail. You make the product worse, people bail. Regulators intervene. Workers revolt. Or someone invents a better alternative.

What’s changed is that many of those constraints have been stripped away. When companies no longer fear competition or enforcement, tactics that would once have destroyed a business suddenly make sense.

You can think of it as experimentation under different conditions. If a company tries something abusive and it blows up in their face, that becomes a lesson. But if it works — if profits rise and no one can escape — then that behavior spreads. Over time, the worst incentives win, not because companies suddenly became more evil, but because we redesigned the system to reward them.

Bioneers: Can you point to a concrete example of how this dynamic plays out?

Cory: A really clear example is Google Search.

By 2019, Google had achieved a roughly 90 percent market share. At that point, you can’t grow by getting more users; you already have almost everyone. But Google still needed to show revenue growth to investors, and that created a crisis inside the company.

What came out in the antitrust cases was an internal fight over what to do next. One faction argued for improving search: making it more accurate, more useful, better for users. Another faction proposed something much simpler: make search worse in a way that shows people more ads.

The idea was to stop “one-shotting” queries. Instead of giving you the best answer right away, Google could force you to run multiple searches, which means more chances to shove ads in your face. From a user’s perspective, search gets worse. From a revenue perspective, it works.

And the reason that faction won is straightforward: Users couldn’t easily leave. Google had locked up defaults on browsers, phones, and operating systems. When competition is effectively neutralized, the risk of users fleeing just isn’t credible anymore.

That’s the key point. When a company knows it can’t easily lose its customers, the argument for degrading the product starts to sound not just acceptable, but smart. And once that logic wins internally, everyone else lives with the consequences.

Bioneers: You’ve said this outcome wasn’t inevitable — that it didn’t have to turn out this way. What made it possible?

Cory: If you look back, there are very specific policy decisions made in living memory that set us on this path. One of the biggest was the decision to stop enforcing antitrust law.

Starting in the 1970s, economists and legal thinkers associated with the Chicago School pushed the idea that monopolies should be presumed efficient. The logic was that if one company dominates a market, it must be because it’s just better. Under that framework, breaking up monopolies starts to look perverse, like punishing success.

Fast forward 40 years and, surprise, we have monopolies everywhere.

That has an immediate effect: Companies no longer fear competition, so they can degrade products without worrying that users will leave. But there’s also a second-order effect that’s just as important. Monopoly makes regulatory capture much easier. When a sector collapses into one dominant firm or a small cartel, it’s far easier for those firms to coordinate lobbying, influence regulators, and bend policy to their will.

And this isn’t abstract. Judges, for example, are required to attend continuing education seminars. Some of those seminars were lavish, industry-funded junkets that offered one-sided arguments against antitrust enforcement. We know which judges attended, and we can see how their rulings changed afterward.

This is how systems get baked in. Not through a single dramatic moment, but through a long series of quiet decisions that tilt the playing field until the outcome feels inevitable, even though it never was.

Bioneers: You’ve also focused a lot on anti-circumvention law and the right to repair. Why does that matter so much?

Cory: Anti-circumvention law makes it illegal to modify, fix, or interoperate with devices in ways the manufacturer doesn’t approve of, even if you own the damn thing.

At the simplest level, it blocks repair. When companies can prevent independent fixes or replacement parts, devices become disposable by design. That drives mountains of e-waste and forces people to replace things that could easily be kept running.

But it goes way beyond phones and laptops. This kind of lock-in makes critical systems brittle. We’ve seen it with medical devices, with infrastructure, and with clean tech — solar inverters, batteries, farm equipment — where cloud control and parts pairing determine whether something works at all.

Once everything is connected to the cloud and legally protected from modification, manufacturers gain terrifying power. They can impose subscriptions after the fact, withdraw features, or just decide that something you already bought doesn’t work anymore.

And here’s the part people don’t want to think about: If companies can remotely disable devices, that power doesn’t stay commercial. It becomes political. We’ve already seen equipment shut down from afar. Once that capability exists, it will be used.

When repair and modification are illegal, systems don’t just get more profitable. They get more fragile.

Bioneers: When you talk about “fixing” this system, what does that look like in practice?

Cory: No one is going to repeal the laws of physics. Things will break. The question is whether we’re allowed to fix them.

This isn’t about making executives nice people. It’s about restoring the conditions that punish bad behavior. When competition, interoperability, repair, and enforcement are real, the worst tactics stop working.

Take Adobe’s move to Creative Cloud. People woke up one day and found they had to pay a monthly fee just to access colors they’d already used in their own work. Files broke. Nothing improved; it just turned into a tollbooth. In a functional system, that would create an opening for competitors to step in and siphon users away.

Same with paywalled hardware features, like cars where part of the battery you already bought is locked behind a subscription. That shit only works when people can’t leave and when no one is allowed to build tools that undo it.

When escape routes exist, internal power dynamics change. The people arguing to degrade products for short-term profit stop winning every argument. You’ll always have ambitious, ruthless actors inside companies, but they don’t get to dictate outcomes for everyone else.

This isn’t fate, and it’s not the iron laws of economics. We’re living with the foreseeable outcomes of specific policy decisions, made by people who were warned about what would happen and did it anyway.

Bioneers: For people who aren’t tech executives or policymakers, what’s actually within their control?

Cory: The biggest mistake we make is treating this like a personal purity test: thinking that if we make the right consumer choices, the system will magically fix itself. That’s bullshit, especially in monopolized markets.

This is the same lesson people have learned in climate work. You can make thoughtful choices about how you live, but agonizing over them won’t stop wildfires. What matters is acting as part of a polity, not as an isolated consumer.

That means collective action. Advocacy organizations matter. Organized boycotts matter. But a boycott isn’t quietly choosing a different brand at the store. It’s public, collective, and done in solidarity, especially with workers.

The encouraging part is that these struggles are connected. Concentrated corporate power shows up as broken tech, environmental destruction, labor exploitation, and democratic erosion. When you see that connection, you stop feeling alone, and you start building coalitions that can actually change the rules.

Bioneers: Is there anything you want people to hold onto as they leave this conversation?

Cory: Solidarity is the answer.

What’s been taken from us is a sense of collective agency. We’ve been told to act as isolated consumers instead of workers, neighbors, and citizens with shared interests. When that happens, concentrated power rushes in to fill the void.

Rebuilding discipline in markets and technology doesn’t require heroic virtue or perfect knowledge. It requires collective action, enforcement, and the willingness to choose different rules — and different leaders — when the old ones fail. It’s hard work, but it’s not complicated. And it’s still within our power.

Cory Doctorow will be speaking at Bioneers 2026 in March.